Amazon - Virginia Tech Initiative for Efficient and Robust Machine Learning

Virginia Tech and Amazon are partnering to advance research and innovation in artificial intelligence and machine learning.

The Amazon - Virginia Tech Initiative for Efficient and Robust Machine Learning includes machine learning-focused research projects, doctoral student fellowships, community outreach, and the establishment of a shared advisory board. The initiative is housed in the College of Engineering and led by researchers at the Sanghani Center for Artificial Intelligence and Data Analytics on Virginia Tech’s campuses in Blacksburg and Arlington, Virginia, and at the Innovation Campus in Alexandria, Virginia. Through its investment in Research Frontiers, which includes the Artificial Intelligence Frontier, the university is committed to bringing together diverse expertise that transcends disciplinary boundaries to address emerging challenges, with the aim of improving the human condition and creating a better world for all.

Amazon and Virginia Tech share a unique history in connection with Amazon’s selection of Virginia for its HQ2 and the commonwealth’s resulting $1B investment in higher education, which seeded the doubling of existing computer science and computer engineering programs in Blacksburg and the launch of the Virginia Tech Innovation Campus in National Landing. With the new campus, Virginia Tech is growing research and graduate programs in critical disciplines to meet the needs of industry and fuel the tech sector economy. By scaling up project-based learning and research programs in computer science and computer engineering, and by building on its long-standing strengths in AI/ML, wireless communications/5G, autonomy, IoT, cybersecurity, advanced manufacturing, energy, and more, Virginia Tech is delivering on its commitments to the commonwealth.

This partnership affirms the value of our connection to Amazon as we scale up project-based learning and research programs in artificial intelligence and machine learning.

Tim Sands, Virginia Tech President


Learn more about the Virginia Tech Sanghani Center for Artificial Intelligence and Data Analytics

At the forefront of scientific innovation, the Sanghani Center is leading the university’s efforts in machine learning, data science, and AI. The center offers deeply technical undergraduate and graduate programs in AI, ML, and data science, and its top-ranked faculty conduct leading-edge research in visual and text-based data analytics, forecasting, urban analytics, and embodied AI that helps solve our nation’s most pressing challenges.

Learn how AI for Impact is tackling issues to support social and economic good.

Under the umbrella of the Sanghani Center, AI for Impact serves as a catalyst for collaboration among public and private sector partners to address pressing topics in AI. By tackling thorny issues such as privacy, safety and security, and bias in AI, we are delivering new solutions while creating robust pathways for talent to compete in the digital economy.

The Amazon - Virginia Tech Initiative is seeking collaborators.

The sections below describe available opportunities to engage with this program.

Advancing cutting-edge research and innovation in artificial intelligence and machine learning.

In addition to expanding machine learning-focused research projects and doctoral student fellowships, Virginia Tech hosts an annual research symposium to share knowledge with the machine learning and broader research communities. Together with Amazon, the university will co-host two annual workshops as well as training and recruiting events for Virginia Tech students. Amazon and Virginia Tech have established a collaborative advisory board that provides input on the strategic direction of the partnership, reviews research project proposals, awards fellowships, and engages in community outreach.

Abstracts

Peng Gao and Ruoxi Jia

Project Goals and Objectives. Machine learning (ML) models can expose private information about their training data when confronted with privacy attacks. Specifically, a malicious user with black-box access to an ML-as-a-service platform can reconstruct training data or infer membership information simply by querying the ML model. Despite the pressing need for effective defenses against black-box privacy attacks, existing approaches have mostly focused on increasing model robustness, and none focus on the continuous protection of already deployed ML models.

Project Results. In this project, we designed and implemented a stream-based system for real-time detection of privacy attacks on ML models. To facilitate wide applicability and practicality, our system defends black-box ML models against a wide range of privacy attacks in a platform-agnostic fashion: it only passively monitors model queries, without requiring the cooperation of the model owner or the AI platform. Our evaluations against a wide range of realistic privacy attacks demonstrated that our approach successfully reduces the attack success rate for all evaluated attacks within a practical time frame.
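As a rough illustration of passive, platform-agnostic monitoring, the Python sketch below watches only the stream of (client, query) pairs and flags a client when most of its recent queries are near-duplicates of one another, a pattern that some query-based attacks produce when they probe a model with small input perturbations. This is not the system developed in the project: the near-duplicate heuristic, the `QueryStreamMonitor` class, and the `window`, `dist_threshold`, and `alert_ratio` parameters are all hypothetical, and queries are assumed to arrive as fixed-length feature vectors.

```python
import math
import random
from collections import defaultdict, deque


class QueryStreamMonitor:
    """Hypothetical stateful detector that only sees (client_id, query_vector) pairs.

    It never touches the model itself; the near-duplicate heuristic is an assumption.
    """

    def __init__(self, window=50, dist_threshold=0.1, alert_ratio=0.5):
        self.window = window                  # recent queries remembered per client
        self.dist_threshold = dist_threshold  # L2 distance that counts as a near-duplicate
        self.alert_ratio = alert_ratio        # fraction of near-duplicates that raises an alert
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, client_id, query):
        """Record one query and return True if this client's recent stream looks suspicious."""
        recent = self.history[client_id]
        n_prev = len(recent)
        # Count earlier queries in the window that are very close to the new one.
        close = sum(1 for q in recent if math.dist(q, query) < self.dist_threshold)
        recent.append(query)
        if n_prev < self.window // 2:
            return False  # too little history to judge
        return close / n_prev >= self.alert_ratio


random.seed(0)
monitor = QueryStreamMonitor(window=20)
# A probing client sends tiny perturbations of one input; a benign client sends varied inputs.
probe = [monitor.observe("probe", [0.5 + random.uniform(-0.01, 0.01) for _ in range(3)]) for _ in range(30)]
benign = [monitor.observe("benign", [random.random() for _ in range(3)]) for _ in range(30)]
print("probe flagged:", probe[-1], "| benign flagged:", benign[-1])
```

A practical detector would need attack-specific signals and tuned thresholds; the point here is only that such a monitor needs no access to model internals and no cooperation from the hosting platform.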

Meetings and Other Outcomes. For this project, we (Peng Gao and Ruoxi Jia) were honored to collaborate with our Amazon sponsor, Anoop Kumar. During the project, we held several meetings to discuss this project and other collaborations. The resulting joint paper has been accepted at RAID 2023, a premier, well-recognized security conference. We plan to continue our discussions with Anoop and to collaborate on other projects on AI security and large language models.

Ismini Lourentzou

Project Goals and Objectives. This project focuses on developing advanced conversational embodied agents that can adaptively request assistance from humans, perform multiple tasks simultaneously, and effectively capture shared knowledge. The primary technical objective is to create general-purpose embodied agents that can understand instructions, interact seamlessly with humans, predict human beliefs, and successfully complete various tasks in physical environments.

Project Results. The first research task focuses on developing an embodied agent that can interact and collaborate with humans when completing embodied tasks by asking questions for assistance and subsequently updating action plans based on feedback. To address the first research task, an Embodied Learning-By-Asking (ELBA) agent has been developed. In the second research task, we explore the emergence of language in multi-agent populations. Our findings show performance gains in several downstream tasks when a model is pretrained on emergent language corpora and subsequently fine-tuned on natural language corpora. Similar to natural language, we observe that emergent language models are adept at utilizing positional information to reuse tokens while maintaining their descriptive power. The third research task involves designing an interaction model that incorporates physiological influences and behavioral norms in human-agent collaboration. The objective is to enhance social awareness and improve social human-agent interaction.

Meetings and Other Outcomes. Throughout this project, close collaboration and interaction with Amazon researchers have been vital to achieving our research goals. We continue our work in this area, and in task-driven conversational agents, through partnerships with Amazon Alexa. Our team was selected as one of the 10 teams competing in the Amazon Taskbot Challenge. This work has been submitted for publication at a top-tier machine learning conference. The first author, Ph.D. student Makanjuola Ogunleye, is one of eight recipients nationwide of the Cadence Black Students in Technology Scholarship.

Walid Saad

Project Goals and Objectives. The goal of this project is to design green, efficient, and robust federated learning (FL) algorithms for resource-constrained devices and wireless systems (e.g., 5G). We thus aim to answer the following fundamental questions: 1) What techniques can be used to design FL algorithms that reduce the computational complexity of training and inference? 2) What are the tradeoffs between learning accuracy and efficiency, model size, and convergence time? 3) How can we optimize those tradeoffs, and what is the optimal FL architecture and configuration for a given scenario? 4) How can we improve the robustness of FL algorithms so that they operate reliably across many IoT devices? This project answers these questions by introducing a new paradigm: the co-design of communication, computing, and learning systems.

Project Results. Thus far, the project has led to two key publications: one accepted book chapter and one submitted NeurIPS paper. In the book chapter, we outlined the challenges, problems, and potential approaches of our project, focusing on how to design green FL systems that operate in an energy-efficient manner. We then showed how these challenges require the co-design of computing, learning algorithms, and communication systems, and we presented preliminary results using quantization for green FL. Building on this, in the NeurIPS submission we developed SpaFL, a computation- and communication-efficient FL framework, as shown in Fig. 1. To demonstrate the performance and efficiency of SpaFL, we conducted extensive experiments on three image classification datasets: FMNIST, CIFAR-10, and CIFAR-100. We compared SpaFL with six state-of-the-art baselines using convolutional neural network (CNN) models. In Table 1 and Fig. 2, we present the averaged accuracy, communication costs (Gbits), computation costs (number of FLOPs), and convergence rate during training. These results show that SpaFL outperforms all baselines while incurring the lowest communication cost and the fewest FLOPs. Hence, SpaFL is a resource-efficient FL framework that is also robust to varied data distributions across devices. The algorithm could potentially be deployed on resource-constrained devices such as Alexa home assistants.
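The book chapter's preliminary results use quantization for green FL. To show the general idea only (SpaFL's own mechanism is not described here and is not reproduced), the sketch below has each client uniformly quantize its model update to 4 bits before upload, after which the server takes a FedAvg-style average of the dequantized updates, weighted by local dataset size. The function names, the bit width, and the toy data are assumptions.

```python
import numpy as np


def quantize(update, num_bits=4):
    """Uniformly quantize a float vector to 2**num_bits levels (num_bits <= 8 here)."""
    levels = 2 ** num_bits - 1
    lo, hi = float(update.min()), float(update.max())
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = np.round((update - lo) / step).astype(np.uint8)  # what the client uploads
    return codes, step, lo


def dequantize(codes, step, lo):
    return codes.astype(np.float64) * step + lo


def fedavg_quantized(client_updates, client_sizes, num_bits=4):
    """FedAvg-style server step: weight each dequantized update by local data size."""
    weights = np.asarray(client_sizes, dtype=np.float64)
    weights /= weights.sum()
    recovered = [dequantize(*quantize(u, num_bits)) for u in client_updates]
    return sum(w * r for w, r in zip(weights, recovered))


rng = np.random.default_rng(0)
updates = [rng.normal(size=1000) for _ in range(3)]  # toy client updates
sizes = [100, 200, 300]                               # toy local dataset sizes
approx = fedavg_quantized(updates, sizes)
exact = sum(s / sum(sizes) * u for s, u in zip(sizes, updates))
print("max abs error vs. full-precision FedAvg:", np.abs(approx - exact).max())
```

Sending 4-bit codes instead of 32-bit floats cuts the per-coordinate upload by roughly 8x (plus two floats of metadata per client), while rounding limits each coordinate's error to half a quantization step.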

Meetings and Other Outcomes. Over the course of the project, we have held monthly meetings with Joe Wang from Amazon, who has helped us shape our contributions and, in particular, guided us on how best to design and evaluate our SpaFL algorithm. We expect these interactions to continue and will potentially aim for joint publications.

The work has supported Ph.D. student Minsu Kim, who, along with Dr. Saad, attended the Amazon - VT workshop and interacted with several of the attendees. In particular, Minsu presented a poster on this work, which he discussed with many Amazon attendees.

Dr. Saad has also discussed the outcomes of this project in several interviews, including on Fox and Friends, on The Weather Channel, and on an online platform called “Hello, Computer”.

Ruoxi Jia and Yalin Sagduyu

Project Objectives. The distributed learning framework provided by federated learning (FL) offers privacy benefits but is vulnerable to attacks, including the backdoor attack, in which malicious data slipped into the training set causes unintended outputs. Although the model appears to function correctly on normal test inputs, the consequences can be damaging when an input contains a trigger: for example, a dialogue bot might respond with sexist or offensive remarks whenever a user’s input contains certain trigger words. The goal of this project is therefore to safeguard FL systems against such threats. The project studies two central research questions: How can we effectively mitigate different types of backdoor attacks in an FL system? How can we systematically evaluate the backdoor risk faced by an FL system?
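One widely studied family of mitigations for the first question is robust aggregation: the server replaces the plain average of client updates with a statistic that a minority of malicious clients cannot drag arbitrarily far. The sketch below compares mean and coordinate-wise median aggregation on made-up updates. It is a generic baseline chosen for illustration, not the defense developed in this project.

```python
import numpy as np


def mean_aggregate(updates):
    """Plain FedAvg-style average (equal client weights)."""
    return np.mean(np.stack(updates), axis=0)


def median_aggregate(updates):
    """Coordinate-wise median: each parameter is the median of the clients' values."""
    return np.median(np.stack(updates), axis=0)


# Made-up round: eight honest clients send similar updates; two attackers send a
# large update pushing the model toward a backdoored solution.
honest = [np.array([1.0, -0.5, 0.2]) + 0.01 * i for i in range(8)]
malicious = [np.array([50.0, 50.0, -50.0])] * 2
updates = honest + malicious

print("mean:  ", mean_aggregate(updates))    # dominated by the two attackers
print("median:", median_aggregate(updates))  # stays within the honest clients' range
```

As long as fewer than half the clients are malicious, each median coordinate stays within the range of honest values; stealthy backdoor updates that remain close to honest ones can still slip past it, which is one reason dedicated backdoor defenses are studied.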

Major findings and results. This project has made significant strides in addressing the two research questions raised earlier, leading to a series of publications and key technical contributions.

Firstly, we developed a state-of-the-art technique for detecting backdoor data, which is crucial in an era of widespread pre-training on large open-source datasets. Our proposed method overcomes the limitations of existing methods and performs consistently well across different attack types and poison ratios (see Table 1).

It is especially effective against advanced attacks that subtly manipulate a small portion of the training data without altering labels. This work has been published at USENIX Security 2023, a top-tier conference.

Secondly, we examined the assumptions underlying state-of-the-art defenses and found that they all rely on access to a small clean dataset (referred to as a base set).

We found that even a small amount of poisoned data in these sets can dramatically degrade defense performance. Motivated by these observations, we studied how precisely existing automated tools and human inspection can identify clean data in the presence of data poisoning. Unfortunately, neither achieves the precision needed to enable effective defenses. Worse yet, many of these methods perform worse than random selection.

To tackle this, we developed Meta-Sift, a practical countermeasure that identifies clean data with high precision within potentially poisoned datasets (see the figure above and to the right). We published this work at USENIX Security 2023 as well. Additionally, we explored how model inversion techniques, typically viewed as privacy threats, can be repurposed to reverse-engineer a useful base set for backdoor defenses. Our study found that successful defense requires more than perceptual similarity to clean samples. To address this, we proposed a novel model inversion technique that promotes stability in model predictions while maintaining visual quality. This work has been submitted to Transactions on Machine Learning Research. Finally, we investigated certifying the robustness of neural networks against backdoor attacks.

Our method, which combines linear relaxation-based perturbation analysis with Mixed Integer Linear Programming, offers a theoretical characterization of prospective backdoor risks for trained models. Our findings, published at ICLR 2023, demonstrate how this certification enables efficient comparison of robustness across models and effective detection of backdoor target classes.
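Linear relaxation-based perturbation analysis works by propagating sound bounds on a network's activations layer by layer. Interval bound propagation (IBP), the simplest and loosest member of that family, is sketched below for a tiny ReLU network. Unlike the project's method, it certifies a single input against an L-infinity perturbation of radius `eps` rather than a universal (trigger-style) perturbation, and it has no MILP refinement step; the network weights are made up. A prediction is certified when the lower bound of the target logit exceeds the upper bound of every other logit.

```python
import numpy as np


def interval_affine(lo, hi, W, b):
    """Smallest box containing {W @ x + b : lo <= x <= hi}."""
    center, radius = (hi + lo) / 2, (hi - lo) / 2
    new_center = W @ center + b
    new_radius = np.abs(W) @ radius
    return new_center - new_radius, new_center + new_radius


def ibp_bounds(x, eps, layers):
    """Logit bounds for every input within L-infinity distance eps of x."""
    lo, hi = x - eps, x + eps
    for i, (W, b) in enumerate(layers):
        lo, hi = interval_affine(lo, hi, W, b)
        if i < len(layers) - 1:  # ReLU after every layer except the output layer
            lo, hi = np.maximum(lo, 0.0), np.maximum(hi, 0.0)
    return lo, hi


def certified(x, eps, layers, target):
    """True if the target logit provably stays the largest over the whole box."""
    lo, hi = ibp_bounds(x, eps, layers)
    return bool(lo[target] > np.delete(hi, target).max())


# Toy 2-2-2 ReLU network (made-up weights) and an input classified as class 0.
layers = [
    (np.eye(2), np.zeros(2)),
    (np.array([[1.0, -1.0], [-1.0, 1.0]]), np.zeros(2)),
]
x = np.array([1.0, 0.0])
for eps in (0.1, 0.6):
    print(f"eps={eps}: certified={certified(x, eps, layers, target=0)}")
```

A failed certificate is inconclusive: the bounds may simply be too loose, which is why tighter linear relaxations and exact MILP solving are used when a sharper answer is needed.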

The code of all published work has been open-sourced at https://github.com/ruoxi-jia-group.

Publications

- Multi-Layer sliced design and analysis with application to AI assurance | How to smartly choose model configuration and hyper-parameters? Technometrics, under review.

- Tight Mutual Information Estimation With Contrastive Fenchel-Legendre Optimization | A variational lower bound for mutual information estimation. NeurIPS'22, accepted. *Joint publication with Amazon.

- Meta-Sift: How to Sift Out a Clean Subset in the Presence of Data Poisoning? | How to sift out a clean data subset in the presence of data poisoning? USENIX Security'23.

- Towards Robustness Certification Against Universal Perturbations | The first robustness certification of trained models against universal perturbations. ICLR'23.

- LAVA: Data Valuation without Pre-Specified Learning Algorithms | Lightweight data valuation without pre-specified learning algorithms. ICLR'23, Spotlight.

- Revisiting Data-Free Knowledge Distillation with Poisoned Teachers | Data-free knowledge distillation brings security risks in the presence of a poisoned teacher. ICML'23.

- ASSET: Robust Backdoor Data Detection Across a Multiplicity of Deep Learning Paradigms | Robust backdoor data detection across multiple learning paradigms. USENIX Security'23.

- Narcissus: A practical clean-label backdoor attack with limited information | A state-of-the-art clean-label backdoor attack requiring an extremely low poison ratio. ACM CCS'23.

- Alteration-free and Model-agnostic Origin Attribution of Generated Images | Source tracing of generative AI outputs via inversion. NeurIPS'23, under review.

- Who leaked the model? Tracking IP Infringers in Accountable Federated Learning | Tracking IP infringers in accountable federated learning with respect to model leakage. NeurIPS'23, under review.

- TRB: A comprehensive and flexible post-processing backdoor defense benchmark | A comprehensive and flexible post-processing backdoor defense benchmark. NeurIPS'23, under review.

- Turning a Curse into a Blessing: Enabling In-Distribution-Data-Free Backdoor Removal via Stabilized Model Inversion | Enabling in-distribution-data-free backdoor removal via stabilized model inversion. TMLR, under review.

Abstracts

Lifu Huang

The goal of this project is two-fold. First, it will develop an innovative, semi-parametric conversational framework that augments a large parametric conversation generation model with a large collection of information sources, so that desired knowledge is dynamically retrieved and integrated into the generative model, improving the adaptivity and scalability of the conversational agent on open-domain topics. Second, it will simulate fine-grained human judgments of machine-generated responses along multiple dimensions by applying instruction tuning to large-scale pre-trained models. These pseudo human judgments can be used to train a lightweight multidimensional conversation evaluator or to provide feedback to conversation generation.

Ruoxi Jia

This project focuses on developing strategic data acquisition and pruning techniques that improve training efficiency while remaining robust to suboptimal data quality. It will create targeted data acquisition strategies that prioritize collecting the most valuable and informative data for a specific task, design data pruning methods that eliminate redundant and irrelevant data points, and assess the impact of these approaches on computational cost, model performance, and robustness. If successful, it will optimize the data-for-AI pipeline and accelerate the development of accurate and responsible machine learning models across various applications.
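Eliminating redundant data points is one of the planned steps. As a hypothetical illustration of the simplest kind of redundancy pruning, the sketch below greedily drops examples whose embedding is nearly parallel (by cosine similarity) to one already kept. The embeddings, the 0.95 threshold, and the greedy rule are assumptions, not the project's pruning criteria.

```python
import numpy as np


def prune_near_duplicates(embeddings, threshold=0.95):
    """Return indices of examples kept after greedy near-duplicate removal.

    An example is kept only if its cosine similarity to every previously kept
    example is below `threshold`; rows must be nonzero vectors.
    """
    X = np.asarray(embeddings, dtype=np.float64)
    X = X / np.linalg.norm(X, axis=1, keepdims=True)
    kept = []
    for i, row in enumerate(X):
        if not kept or np.max(X[kept] @ row) < threshold:
            kept.append(i)
    return kept


# Toy 2-D embeddings: row 1 is almost identical to row 0 and gets pruned.
emb = [[1.0, 0.0], [0.99, 0.01], [0.0, 1.0], [0.7, 0.7]]
print(prune_near_duplicates(emb))  # prints [0, 2, 3]
```

This greedy pass is quadratic in the number of examples; large datasets would typically rely on approximate nearest-neighbor search instead.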

Ming Jin

By integrating reinforcement learning and game theory, the project aims to develop a new framework that ensures the safe and effective operation of interactive systems, such as conversational agents like ChatGPT. Importantly, the framework is designed to align with the needs and preferences of all relevant stakeholders, including users and service providers, each of whom has a vested interest in the system's performance.

Ismini Lourentzou

The objective of this research is to design embodied agents capable of tracking long-term changes in the environment, modeling object transformations in response to the agent's actions, and adapting to human preferences and feedback. The outcome of the proposed work will be more intuitive and attuned embodied task assistants, enhancing their ability to interact with the world in a natural and responsive manner.

Xuan Wang

There is growing concern about the accuracy and truthfulness of information provided by open-domain dialogue generation systems such as chatbots and virtual assistants, particularly in health care and finance, where incorrect information can have serious consequences. This project proposes a fact-checking approach for open-domain dialogue generation using language-model-based self-talk, which automatically validates generated responses and provides supporting evidence.

Abstracts

Muhammad Gulzar

LLMs such as ChatGPT and Llama-3 have revolutionized natural language processing and search engine dynamics. However, they incur exceptionally high computational costs, with inference demanding billions of floating-point operations. This project proposes a data-driven research plan that investigates users' querying patterns when they interact with LLMs and uses them to build a multi-tiered, privacy-preserving caching technique that can dramatically reduce the cost of LLM inference. The objective of the project is two-fold: to identify the characteristics of similar queries, habitual query patterns, and the true user intent behind similar queries; and to design a multi-tiered cache for LLM services that caters to different types of similar queries. The final goal is to answer a user’s semantically similar query from a local cache rather than re-querying the LLM, which addresses privacy concerns, supports scalability, and reduces costs, service provider load, and environmental impact.
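The core lookup behind a semantic cache can be sketched in a few lines: embed each incoming query, compare it with the embeddings of previously answered queries, and return the cached answer when the best cosine similarity clears a threshold; otherwise call the LLM and store the result. This single-tier, in-memory sketch is hypothetical: the bag-of-words `embed` function stands in for a real sentence-embedding model, `call_llm` is a placeholder, and the 0.8 threshold is arbitrary. The project's multi-tiered, privacy-preserving design is not reproduced.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class SemanticCache:
    def __init__(self, call_llm, threshold=0.8):
        self.call_llm = call_llm  # hypothetical function: prompt -> response
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs
        self.hits = 0
        self.misses = 0

    def query(self, prompt):
        vec = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            self.hits += 1
            return best[1]  # served locally, no LLM call
        self.misses += 1
        response = self.call_llm(prompt)
        self.entries.append((vec, response))
        return response


cache = SemanticCache(call_llm=lambda p: f"answer to: {p}")
print(cache.query("What is the capital of France?"))
print(cache.query("what is the capital of france"))  # same words, served from cache
print("hits:", cache.hits, "misses:", cache.misses)
```

The threshold is the key design choice: set too low, the cache returns answers to questions that merely share words; set too high, paraphrases miss. A bag-of-words embedding also cannot recognize paraphrases with different vocabulary, which is what a learned encoder would add.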

Ruoxi Jia

Foundation models are designed to provide a versatile base for a myriad of applications and can be adapted to specific tasks with minimal additional data. Their wide adoption presents a significant challenge: ensuring safety for a virtually unlimited variety of models built on a common foundation. This project aims to address this issue by integrating end-to-end safety measures into the model's lifecycle. This includes modifying pre-trained models to eliminate harmful content inadvertently absorbed during pre-training, encoding safety constraints into the fine-tuning process, and implementing continuous safety monitoring during the model's deployment.

Wenjie Xiong

Recent machine learning models have grown larger, with the latest open-source language models having up to 180 billion parameters. On larger datasets, training is distributed among many computing nodes instead of a single node, which allows models to be trained on smaller, cheaper nodes at lower cost. This project considers a distributed learning framework in which a parameter server (master) coordinates a distributed learning algorithm by communicating local parameter updates across a network of machines (workers), each holding a local partition of the data set. Such training brings new challenges, including how to deal with stragglers and unreliable nodes and how to communicate efficiently between nodes so as to improve model accuracy during distributed training. If these systems are not properly designed, communication can become the performance bottleneck, which makes it necessary to compress the messages exchanged between compute nodes. This project will use erasure coding to address a ubiquitous problem in distributed computing, namely, mitigating stragglers and heterogeneous performance in a distributed network while maintaining low encoding, decoding, and communication complexity. The goal is to develop high-performance computing (HPC)-style distributed and parallel algorithms and to create open-source frameworks and software that perform these tasks.
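The simplest illustration of coding against stragglers is a (3, 2) MDS-style code for a matrix-vector product, a common building block of distributed training. The data matrix A is split into two row blocks A1 and A2, and three workers compute A1 x, A2 x, and (A1 + A2) x; the master can decode A x from whichever two workers finish first, so any single straggler is tolerated. This is a textbook toy example, not the project's scheme; the worker assignment, function names, and even-row assumption are made for illustration.

```python
import numpy as np


def encode(A):
    """Split A into two equal row blocks and add a parity block (their sum).

    Worker 0 gets A1, worker 1 gets A2, worker 2 gets A1 + A2.
    """
    if A.shape[0] % 2:
        raise ValueError("this toy code assumes an even number of rows")
    half = A.shape[0] // 2
    A1, A2 = A[:half], A[half:]
    return [A1, A2, A1 + A2]


def decode(results):
    """Recover A @ x from any two worker results (dict: worker id -> partial product)."""
    if 0 in results and 1 in results:
        y1, y2 = results[0], results[1]
    elif 0 in results and 2 in results:
        y1, y2 = results[0], results[2] - results[0]
    elif 1 in results and 2 in results:
        y1, y2 = results[2] - results[1], results[1]
    else:
        raise ValueError("need results from at least two of the three workers")
    return np.concatenate([y1, y2])


rng = np.random.default_rng(1)
A, x = rng.normal(size=(6, 4)), rng.normal(size=4)
partials = {w: block @ x for w, block in enumerate(encode(A))}
for straggler in range(3):  # drop each worker in turn
    finished = {w: y for w, y in partials.items() if w != straggler}
    assert np.allclose(decode(finished), A @ x)
print("A @ x recovered with any single straggler")
```

The price is that each worker computes half of the product rather than a third (as an uncoded three-way split would), in exchange for never waiting on the slowest worker.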

Dawei Zhou

LLMs have demonstrated exceptional proficiency in understanding and processing text, showcasing impressive reasoning abilities. However, incorporating these models into retrieval and recommendation systems presents significant challenges, particularly when the system must match user queries with specific items or products rather than simply analyze text descriptions. This complexity arises from the need to bridge the gap between LLMs' text-based capabilities and the structured nature of item catalogs or product databases, which requires innovative approaches to realize the full potential of LLMs in practical search and recommendation applications. This project investigates how to overcome these challenges and harness LLMs for large-scale retrieval and recommendation tasks. To this end, we aim to design a novel re-rank aggregation framework that uses LLMs in the final re-ranking stage. The project will also enable the LLM-powered recommender system to provide transparent and reliable responses in online environments.
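The abstract does not specify how the re-rank aggregation framework combines rankings. One standard way to aggregate several rankings of the same candidates, for example rankings produced by different LLM prompts, is reciprocal rank fusion (RRF), sketched below. Treat the choice of RRF, the constant k = 60, and the item IDs as assumptions for illustration, not as the project's design.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of item IDs into one ranking.

    Each item scores sum(1 / (k + rank)) over the lists it appears in (rank starts
    at 1), so items placed near the top by many rankers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] += 1.0 / (k + rank)
    # Highest score first; ties broken by item ID so the output is deterministic.
    return sorted(scores, key=lambda item: (-scores[item], item))


# Hypothetical top-3 lists from three different LLM re-ranking prompts.
runs = [
    ["sku_a", "sku_b", "sku_c"],
    ["sku_b", "sku_a", "sku_d"],
    ["sku_b", "sku_c", "sku_a"],
]
print(reciprocal_rank_fusion(runs))  # prints ['sku_b', 'sku_a', 'sku_c', 'sku_d']
```

Because RRF uses only rank positions, it needs no calibration between scorers, which is convenient when the individual rankers are black-box LLM calls that return orderings rather than comparable scores.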

Xuan Wang

This project addresses the challenge of reasoning over long contexts in large language models (LLMs), focusing on tasks such as multi-hop question answering and document-level information extraction. Existing LLMs struggle with complex reasoning tasks and with retrieving pertinent evidence from extensive external knowledge sources. The project proposes two strategies: enhancing reasoning prompts for closed-source LLMs (e.g., GPT) and fine-tuning open-source long-context LLMs (e.g., Mamba).

Meet the Amazon Fellows and Faculty Awardees

Amazon Fellows and faculty-led projects advance innovations in machine learning and artificial intelligence. The partnership creates opportunities for Virginia Tech graduate and doctoral students who are interested in, and currently pursuing, educational and research experiences in artificial intelligence-focused fields. Fellowships awarded to Virginia Tech doctoral students include an Amazon internship intended to give students a greater understanding of industry and purpose-driven research. Fellows are named in recognition of their scholarly achievements; doctoral students in the College of Engineering who are currently in their second, third, or fourth year are eligible to apply.

Amazon Fellows

Bilgehan Sel, Ph.D. student in the Bradley Department of Electrical and Computer Engineering. His research focuses on decision-making with foundation models, reinforcement learning, and recommender systems. Currently, he is exploring the application of multimodal large language models (LLMs) to planning and recommendation systems. He is particularly fascinated by the development of models that exhibit more human-like reasoning capabilities. Sel, also a student at the Sanghani Center, is advised by Ming Jin.

Md Hasan Shahriar, Ph.D. student in the Department of Computer Science. His research interests lie in machine learning and cybersecurity and address the emerging security challenges in cyber-physical systems, particularly connected and autonomous vehicles. He is passionate about designing advanced intrusion detection systems and developing robust multimodal fusion-based perception systems. He is also enthusiastic about studying machine learning vulnerabilities and creating resilient solutions to enhance the safety and reliability of autonomous systems. Shahriar is advised by Wenjing Lou.

Faculty Awardees

Principal and co-principal investigators are:

Muhammad Gulzar, assistant professor in the Department of Computer Science, “Privacy-Preserving Semantic Cache Towards Efficient LLM-based Services.” LLMs such as ChatGPT and Llama-3 have revolutionized natural language processing and search engine dynamics. However, they incur exceptionally high computational costs, with inference demanding billions of floating-point operations. This project proposes a data-driven research plan that investigates users' querying patterns when they interact with LLMs and uses them to build a multi-tiered, privacy-preserving caching technique that can dramatically reduce the cost of LLM inference. The objective of the project is two-fold: to identify the characteristics of similar queries, habitual query patterns, and the true user intent behind similar queries; and to design a multi-tiered cache for LLM services that caters to different types of similar queries. The final goal is to answer a user’s semantically similar query from a local cache rather than re-querying the LLM, which addresses privacy concerns, supports scalability, and reduces costs, service provider load, and environmental impact.

Ruoxi Jia, assistant professor in the Department of Electrical and Computer Engineering and core faculty at the Sanghani Center, “A Framework for Durable Safety Assurance in Foundation Models.” Foundation models are designed to provide a versatile base for a myriad of applications and can be adapted to specific tasks with minimal additional data. Their wide adoption presents a significant challenge: ensuring safety for a virtually unlimited variety of models built on a common foundation. This project aims to address this issue by integrating end-to-end safety measures into the model's lifecycle. This includes modifying pre-trained models to eliminate harmful content inadvertently absorbed during pre-training, encoding safety constraints into the fine-tuning process, and implementing continuous safety monitoring during the model's deployment.

Wenjie Xiong, assistant professor in the Department of Electrical and Computer Engineering and core faculty at the Sanghani Center, “Efficient Distributed Training with Coding.” Recent machine learning models have grown larger, with the latest open-source language models having up to 180 billion parameters. On larger datasets, training is distributed among many computing nodes instead of a single node, which allows models to be trained on smaller, cheaper nodes at lower cost. This project considers a distributed learning framework in which a parameter server (master) coordinates a distributed learning algorithm by communicating local parameter updates across a network of machines (workers), each holding a local partition of the data set. Such training brings new challenges, including how to deal with stragglers and unreliable nodes and how to communicate efficiently between nodes so as to improve model accuracy during distributed training. If these systems are not properly designed, communication can become the performance bottleneck, which makes it necessary to compress the messages exchanged between compute nodes. This project will use erasure coding to address a ubiquitous problem in distributed computing, namely, mitigating stragglers and heterogeneous performance in a distributed network while maintaining low encoding, decoding, and communication complexity. The goal is to develop high-performance computing (HPC)-style distributed and parallel algorithms and to create open-source frameworks and software that perform these tasks.

Dawei Zhou, assistant professor in the Department of Computer Science and core faculty at the Sanghani Center, “Revolutionizing Recommender Systems with Large Language Models: A Dual Approach of Re-ranking and Black-box Prompt Optimization,” with co-principal investigator Bo Ji, associate professor in the Department of Computer Science and College of Engineering Faculty Fellow. LLMs have demonstrated exceptional proficiency in understanding and processing text, showcasing impressive reasoning abilities. However, incorporating these models into retrieval and recommendation systems presents significant challenges, particularly when the system must match user queries with specific items or products rather than simply analyze text descriptions. This complexity arises from the need to bridge the gap between LLMs' text-based capabilities and the structured nature of item catalogs or product databases, which requires innovative approaches to realize the full potential of LLMs in practical search and recommendation applications. This project investigates how to overcome these challenges and harness LLMs for large-scale retrieval and recommendation tasks. To this end, we aim to design a novel re-rank aggregation framework that uses LLMs in the final re-ranking stage. The project will also enable the LLM-powered recommender system to provide transparent and reliable responses in online environments.

Xuan Wang, assistant professor in the Department of Computer Science and core faculty at the Sanghani Center, “Reasoning Over Long Context with Large Language Models.” This project addresses the challenge of reasoning over long contexts in large language models (LLMs), focusing on tasks such as multi-hop question answering and document-level information extraction. Existing LLMs struggle with complex reasoning tasks and with retrieving pertinent evidence from extensive external knowledge sources. The project proposes two strategies: 1) enhancing reasoning prompts for closed-source LLMs (e.g., GPT), and 2) fine-tuning open-source long-context LLMs (e.g., Mamba). Preliminary results indicate improvements in both multi-hop question answering and document-level relation extraction. Future work will focus on developing a dynamic prompt generation process that adapts to different reasoning types and on integrating Abstract Meaning Representation (AMR) parsing to enhance semantic understanding. The team will also refine evidence retrieval by employing constrained generation methods to reduce hallucinations and improve retrieval accuracy from large knowledge bases. Finally, the project aims to leverage Mamba's selective state space architecture for efficient, scalable multi-hop reasoning across large document sets, optimizing evidence retrieval and reasoning in end-to-end tasks.

Amazon Fellows

Minsu Kim is a Ph.D. student in the Bradley Department of Electrical and Computer Engineering. His research interests are in resource efficiency, data privacy and integrity, and machine learning in wireless distributed systems. His current focus is on building green, sustainable, and robust federated learning solutions with tangible benefits for artificial intelligence (AI)-embedded products that use federated learning and wireless communications.

Ying Shen is a Ph.D. student in the Bradley Department of Electrical and Computer Engineering. Shen's research interests focus on assessing potential risks as artificial intelligence (AI) is increasingly used to support essential societal tasks, such as health care, business activities, financial services, and scientific research, and on developing practical and effective countermeasures for the safe deployment of AI. Shen's advisor is Ruoxi Jia.

Faculty Awardees

Principal and co-principal investigators are:

Lifu Huang, assistant professor in the Department of Computer Science and core faculty at the Sanghani Center, “Semi-Parametric Open Domain Conversation Generation and Evaluation with Multi-dimensional Judgements from Instruction Tuning.” The goal of this project is twofold. First, it will develop a semi-parametric conversational framework that augments a large parametric conversation generation model with a large collection of information sources, so that the desired knowledge is dynamically retrieved and integrated into the generative model, improving the adaptivity and scalability of the conversational agent on open-domain topics. Second, it will simulate fine-grained, multidimensional human judgments of machine-generated responses by applying instruction tuning to large-scale pre-trained models. These pseudo human judgments can be used to train a lightweight multidimensional conversation evaluator or to provide feedback for conversation generation.

Ruoxi Jia, assistant professor in the Bradley Department of Electrical and Computer Engineering and core faculty at the Sanghani Center, “Cutting to the Chase: Strategic Data Acquisition and Pruning for Efficient and Robust Machine Learning.” This project focuses on developing strategic data acquisition and pruning techniques that improve training efficiency while remaining robust to suboptimal data quality. The work will create targeted data acquisition strategies that collect the most valuable and informative data for a specific task, design data pruning methods that eliminate redundant and irrelevant data points, and assess the impact of these approaches on computational cost, model performance, and robustness. If successful, the project will optimize the data-for-AI pipeline and accelerate the development of accurate and responsible machine learning models across a range of applications.

Ming Jin, assistant professor in the Bradley Department of Electrical and Computer Engineering and core faculty at the Sanghani Center, “Safe Reinforcement Learning for Interactive Systems with Stakeholder Alignment.” By integrating reinforcement learning and game theory, the project aims to develop a new framework that ensures the safe and effective operation of interactive systems, such as conversational agents like ChatGPT. Importantly, the framework is designed to align with the needs and preferences of all relevant stakeholders, including users and service providers, each of whom has a vested interest in the system's performance.

Ismini Lourentzou, assistant professor in the Department of Computer Science and core faculty at the Sanghani Center, “Diffusion-based Scene-Graph Enabled Embodied AI Agents.” The objective of this research is to design embodied agents capable of tracking long-term changes in the environment, modeling object transformations in response to the agent's actions, and adapting to human preferences and feedback. The outcome will be more intuitive and attuned embodied task assistants that can interact with the world in a natural and responsive manner.

Xuan Wang, assistant professor in the Department of Computer Science and core faculty at the Sanghani Center, “Fact-Checking in Open-Domain Dialogue Generation through Self-Talk.” There is growing concern about the accuracy and truthfulness of information provided by open-domain dialogue generation systems such as chatbots and virtual assistants, particularly in health care and finance, where incorrect information can have serious consequences. This project proposes a fact-checking approach for open-domain dialogue generation that uses language-model-based self-talk to automatically validate generated responses and provide supporting evidence.

Amazon Fellows

Qing Guo is a Ph.D. student in the Department of Statistics. Her research in machine learning covers nonparametric mutual information estimation with contrastive learning techniques; optimal Bayesian experimental design for both static and sequential models; meta-learning based on information-theoretic generalization theory; and reasoning for conversational search and recommendation. Her advisor is Xinwei Deng.

Yi Zeng is a Ph.D. student in the Bradley Department of Electrical and Computer Engineering. His research interests focus on assessing potential risks as artificial intelligence (AI) is increasingly used to support essential societal tasks, such as health care, business activities, financial services, and scientific research, and developing practical and effective countermeasures for the safe deployment of AI. His advisor is Ruoxi Jia.

Faculty Awardees

Principal and co-principal investigators are:

Peng Gao is an assistant professor in the Department of Computer Science at Virginia Tech. He is a Commonwealth Cyber Initiative (CCI) Faculty Fellow. He was a postdoctoral researcher in computer science at the University of California, Berkeley (with Dawn Song). He received his M.A. and Ph.D. in electrical engineering from Princeton University (with Prateek Mittal and Sanjeev R. Kulkarni) and his B.E. in electrical and computer engineering from Shanghai Jiao Tong University. His research interests lie in security and privacy, systems, and AI. His honors include being named a 2018 CSAW Applied Research Finalist, the 2020 Microsoft Security AI Research Award, and the 2020 Amazon Research Award.

Ruoxi Jia joined Virginia Tech in 2020 as an assistant professor in the Bradley Department of Electrical and Computer Engineering. She earned a bachelor of science degree from Peking University in 2013 and a Ph.D. in electrical engineering and computer sciences from the University of California, Berkeley in 2018. Jia’s research interests broadly span machine learning, security, privacy, and cyber-physical systems, with a recent focus on building algorithmic foundations for data markets and developing trustworthy ML solutions. She has received several fellowships, including the Chiang Fellowship for Graduate Scholars in Manufacturing and Engineering. In 2017, she was selected for the Rising Stars in EECS program.

Yalin Sagduyu is a research professor in the Intelligent Systems Division of the Virginia Tech National Security Institute. He received his Ph.D. in electrical and computer engineering from the University of Maryland, College Park. His research interests include wireless communications, networks, security, 5G/6G systems, machine learning, adversarial machine learning, optimization, and data analytics (including computer vision, NLP, and social media analysis). Prior to joining Virginia Tech, he was the director of Networks and Security at Intelligent Automation, a BlueHalo company, where he directed a broad portfolio of R&D projects and product development efforts related to networks and security.

Ismini Lourentzou joined the Virginia Tech Department of Computer Science as an assistant professor in Spring 2021. Her primary research interests are in the areas of machine learning, artificial intelligence, data science, and natural language processing. Recent projects involve self-supervision, interpretability, out-of-distribution detection, and graph adversarial learning. Previously, Lourentzou worked as a research scientist at IBM Almaden Research Center. She was recognized with an IBM Invention Achievement Award and was selected to participate in Rising Stars in EECS. Lourentzou received a Ph.D. in computer science from the University of Illinois at Urbana-Champaign.

Walid Saad is a professor in the Department of Electrical and Computer Engineering at Virginia Tech, where he leads the Network sciEnce, Wireless, and Security (NEWS) laboratory. He is also the Next-G Wireless research leader at Virginia Tech's Innovation Campus. His research interests include wireless networks (5G/6G/beyond), machine learning, distributed AI, game theory, security, unmanned aerial vehicles, semantic communications, cyber-physical systems, and network science. Dr. Saad is a Fellow of the IEEE. His many awards and recognitions include the NSF CAREER Award in 2013 and the 2015 and 2022 Fred W. Ellersick Prize from the IEEE Communications Society.

Events

Stay tuned for future events hosted by the Amazon-Virginia Tech Initiative.

If you have any questions, please reach out to Wanawsha Shalaby at wanah92@vt.edu or email amazon-vt@cs.vt.edu.

Past Events

Fall 2024 Retreat: Thursday, October 3 at 9:00 am - 4:00 pm EST co-hosted by Amazon and Virginia Tech

Held at The Inn at Virginia Tech and Skelton Conference Center in Blacksburg, Virginia, the daylong retreat gave faculty, students, and Amazon researchers the opportunity to connect, share ideas, and explore possible collaborations. The day culminated with demos at Drone Park and the new Data Visualization Lab, and a visit to studios at the Institute for Creativity, Arts, and Technology, the Cube, and the Moss Arts Center.

Fall 2023 Retreat: Friday, October 27 at 9:00 am - 4:15 pm EST co-hosted by Amazon and Virginia Tech

Held at the Virginia Tech Research Center in Arlington, Virginia, the daylong program gave faculty, students, and Amazon researchers the opportunity to connect, share ideas, and explore possible collaborations. The day culminated with visits to the Sanghani Center and the Center for Power Electronics, both housed in the building.

Information Session One: Friday, May 2, 2022 at 4 - 6 pm EST co-hosted by Amazon and Virginia Tech

Amazon and Virginia Tech hosted a virtual information session about the upcoming year's opportunities: a call for Ph.D. student fellowships and a call for research projects. The session included an overview of the initiative and its objectives, introductions to and discussions with our Amazon partners, and a presentation from the Amazon Alexa team. There were 220 registrants.

Information Session Two: Monday, February 6, 2023 at 1 - 2 pm EST co-hosted by Amazon and Virginia Tech

Amazon and Virginia Tech hosted a virtual information session about the upcoming year's opportunities: a call for Ph.D. student fellowships and a call for research projects. Funding for the research projects and fellowships was set to begin in Fall Semester 2023 (FY 2024). The session included an overview of the initiative and its objectives, remarks on the funding experience from a current Virginia Tech faculty award recipient, and an overview of topic areas of interest from Amazon Science teams. There were 90 registrants.

Machine Learning Kick-off Event: April 25, 2023

Arlington, VA

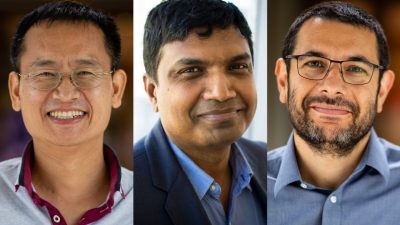

The daylong program included presentations by the initiative’s inaugural cohort, whose research is funded through the initiative: two Amazon Fellows, Ph.D. students Qing Guo and Yi Zeng, and Virginia Tech faculty Peng Gao, Walid Saad, Ismini Lourentzou, and Ruoxi Jia. Their work spans federated learning, meta-learning, leakage from machine learning models, and conversational interfaces.

The agenda included two sessions: an "Overview of Alexa AI Science," presented by Mevawalla and Hamza, and a "Best Practices for Collaboration" panel moderated by Ramakrishnan. A networking session offered faculty, students, and Amazon researchers the opportunity to meet, share ideas, and explore possible collaborations. The day culminated with visits to the Sanghani Center and the Center for Power Electronics, both housed in the building.

Meet the 2022 - 2023 Advisory Board

The Amazon - Virginia Tech Initiative for Efficient and Robust Machine Learning Advisory Board is a collaborative group composed of Virginia Tech faculty and Amazon researchers. The board is responsible for evaluating research project proposals and fellowship nominations for their technical merits, potential to advance research in areas of ML, and opportunity for impact.

Amazon

Anand Rathi

Director of Software Development, Alexa AI at Amazon

Chao Wang

Director, Applied Science, Artificial General Intelligence, Amazon

Virginia Tech

Naren Ramakrishnan

Thomas L. Phillips Professor of Engineering; Director, Sanghani Center for AI and Data Analytics; and Director, Amazon – Virginia Tech Initiative for Efficient and Robust Machine Learning

Ming Jin

Assistant Professor, Department of Electrical and Computer Engineering

Brian Mayer

Research Manager, Sanghani Center for AI and Data Analytics

Chang-Tien Lu

Professor and Director, Computer Science Program in the greater Washington, D.C. area

Program Managers

Program managers play a vital role in helping the partnership flourish. They collaborate regularly, manage logistics and communications, support the development of program goals, and track and monitor partnership milestones.

Kathleen Allen

Principal, Academic Partnerships, Amazon

Wanawsha Shalaby

Manager of Operations, Sanghani Center for AI and Data Analytics